Bryan Blette

Assessing Treatment Effect Heterogeneity in the Presence of Missing Effect Modifier Data in Cluster-Randomized Trials

Presenter

Bryan Blette is a Postdoctoral Fellow at the Center for Causal Inference and DBEI. He completed his Ph.D. in Biostatistics from UNC - Chapel Hill in 2021. His main methodological interests are in causal inference, measurement error, and Bayesian methods.

Abstract

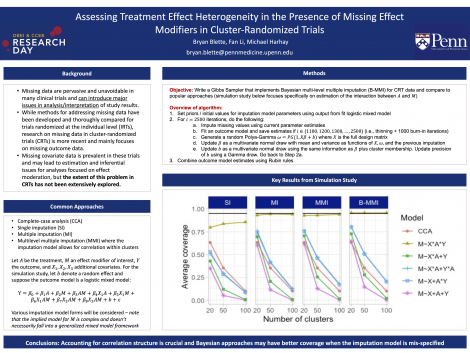

Understanding whether and how treatment effects vary across individuals is crucial to inform clinical practice and recommendations. Accordingly, the assessment of heterogeneous treatment effects (HTE) based on pre-specified potential effect modifiers has become a common goal in modern randomized trials. However, when one or more potential effect modifiers are missing, complete-case analysis may lead to bias, under-coverage, inflated type I error, or low power. While statistical methods for handling missing data have been proposed and compared for individually randomized trials with missing effect modifiers, few guidelines exist for the cluster-randomized setting, where intracluster correlations in the effect modifiers, outcomes, or even missingness mechanisms may introduce further threats to accurate assessment of HTE. In this paper, the performance of various missing data methods are neutrally compared in a simulation study of cluster-randomized trials with missing effect modifier data, and a Bayesian multilevel multiple imputation approach is proposed and evaluated. Thereafter, we impose controlled missing data scenarios to potential effect modifiers from the Work, Family, and Health Study to illustrate the proposed missing data method.

Keywords

Cluster Randomized Trials, Effect Modification, Heterogeneous Treatment Effects, Missing Data, Multilevel Data, Multiple ImputationAbout Us

To understand health and disease today, we need new thinking and novel science —the kind we create when multiple disciplines work together from the ground up. That is why this department has put forward a bold vision in population-health science: a single academic home for biostatistics, epidemiology and informatics.

© 2023 Trustees of the University of Pennsylvania. All rights reserved.. | Disclaimer

Comments

What was the sample size and/or average cluster size in your simulations? Can you provide intuition on why the coverage decreases with increasing number of clusters in the mis-specified models?